Hello,

Being stuck inside had me bored, so back in April I restarted a project I dabbled in last August that tried to use machine learning to predict who will win a Pokemon battle. Over time I realized you could do more and more with machine learning, so eventually the project expanded to predict what players will do. And after a couple of months, I ended up with a few really good working models that I'm releasing (hopefully) today in a Chrome extension known as Pokemon Battle Predictor!

EDIT: The extension is live!! You can install it from the extension store for the following browsers:

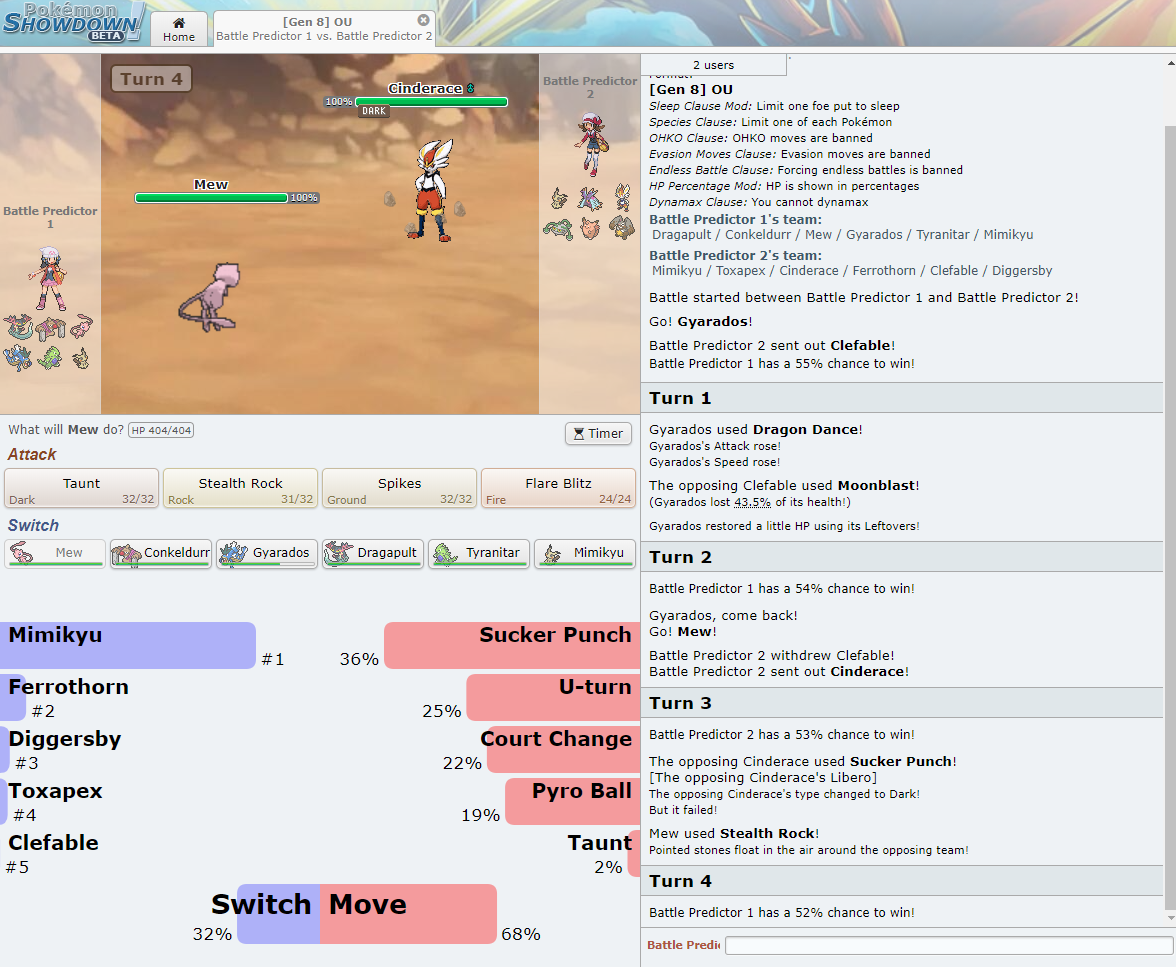

On the surface, Pokemon Battle Predictor uses 4 TensorFlow.js models trained on 10,000+ battles to tell you the current probability of:

The chance of the player to win is listed in the battle log after every turn. Key word here is chance, as there is a difference between trying to predict what will happen next and the chance of something happening. The difference is the former is judged by the accuracy of each prediction while the latter is judged by whether the outputs of a specific chance are accurate "that chance" of the time. I went for predicting chance as this is way more useful for any kind of game and this one in particular is way too random to find anything but chance.

How does it work?

All the machine learning models start with the same data: battle replays. I downloaded all the replays for gen8ou battles from June 3rd to June 13th and kept the ones where both players have a rating of 1250 or higher. The dates were chosen to reflect the most recent meta-game (more on that later) and the rating was kinda arbitrary as I wanted to make sure the battlers knew what they were doing but also wanted to keep as much data as possible. All the models also have the same inputs which are taken for each turn of each battle:

Predicting who will be switched in requires first training a model where the training output is a list of all Pokemon where the Pokemon that switched in is marked as the correct answer. After that is trained, a layer is added on the end of that with the same set of outputs so the model can learn which Pokemon are brought in under similar situations. For example, the first layer may only give Seismitoad a large chance to be switched in, but the second layer has learned a high chance for Seismitoad should also mean a high chance for Gastrodon and Quagsire as well.

For predicting what move they will use, the model was trained where it would predict the chance of every move being used, then base on which Pokemon is in, would multiply those chances by 1 if the move has been used by that Pokemon in this battle before or the usage percent for that from the most recent moveset usage stats. This is done to both teach the model what moves a Pokemon can use and the likelihood of the Pokemon having the learnable move.

I know that wasn't a clear explanation, so if you want to learn more I can answer any questions you have. I just want to get this out there now so clarity wasn't top of mind.

How well does it work?

The models were tested using a separate set of data to see how often results of specific chance are correct and the overall accuracy of the model.

Each model has a 95% confidence interval of 8%, which means the chance it gives for something to happen is within 8% on either side of the real chance. For a game and players as random as it's trying to predict without full information on either team, those are very good results and means the chances the model returns are accurate. I tried a whole bunch of different methods (which to spare this post being an essay I won't talk about now) and getting that confidence any lower will be an ordeal.

And even though I only cared about finding the chance, the models are pretty accurate too. For any given turn in a battle, the chance to win is 67% accurate (with that number increasing the further into the battle you go). All the other models have an accuracy of ~65%. However, from running the Chrome extension on battles the last couple of days, it feels like the prediction of who will switch in is either always right or always wrong for a given battle, but that's just anecdotal evidence.

The biggest caveat to all of this has to do with how this reflects the current meta-game. First off, it was made to work with how people play in OU in mind and nothing else, so it shouldn't be used on other tiers. It might work fine in UU and decent in RU, but anything else would just be luck. It's very easy for me to make models for the other tiers as all I'd need to do is download the replays, but because of the royal pain that is getting replays more than a day old, I'm not going to do that soon. The reason I'm waiting is the obvious other downfall of this: DLC is about to change everything. That does mean the extension as is now will not work once all the DLC is added and may take a bit before the meta-game is stable enough to predict again. That's why I'm launching my extension now (or as soon as the Chrome store is done reviewing it) so people can use it and see what they think before I have to wait a month to update it.

What comes next?

So obviously my next big objective is making it work post DLC, but in the meantime I'll probably get it to work on the National Dex meta-game. Beyond that, my next goals are:

I first found this forum a few days ago when looking for a way to tell people about this and only while writing this noticed a few people have tried this before. If I'd seen that any earlier, I would've helped them, but alas I'm open to talking to people about helping on this project.

Once it's available on the Chrome Store, I'll post here again. But if you just look up "Pokemon Battle Predictor" in the Chrome Store in a couple of hours, it should be there.

And one more thing: You might think to yourself "if you can find the chance to win for any turn and predict your opponent's next move, couldn't you also use this to make a good Battle AI?". Yes, yes you could, and I only know that because I did, but I'll talk more about that later.

Being stuck inside had me bored, so back in April I restarted a project I dabbled in last August that used machine learning to try to predict who will win a Pokemon battle. Over time I realized you could do more and more with machine learning, so the project eventually expanded to predicting what players will do. After a couple of months, I ended up with a few models that work really well, which I'm releasing (hopefully) today in a Chrome extension called Pokemon Battle Predictor!

EDIT: The extension is live!! You can install it from the extension store for the following browsers:

- Firefox: https://addons.mozilla.org/en-US/firefox/addon/pokemon-battle-predictor/

- Chrome: https://chrome.google.com/webstore/detail/pokemon-battle-predictor/efpcljpklgonmgjeiacfbfbpcecklfni

On the surface, Pokemon Battle Predictor uses 4 TensorFlow.js models trained on 10,000+ battles to tell you the current probability of:

- Who will win the battle

- Your opponent switching out or choosing a move

- Which move they will use if they stay in

- Which Pokemon they will switch to if they switch

The player's chance to win is listed in the battle log after every turn. The key word here is chance: predicting what will happen next is different from predicting the chance of something happening. The former is judged by how often each prediction is right, while the latter is judged by whether things given a specific chance actually happen "that chance" of the time (e.g., outcomes given 70% should happen about 70% of the time). I went with predicting chance because it's way more useful for any kind of game, and this one in particular is way too random to find anything but chance.
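
To make the chance-vs-accuracy distinction concrete, here is a minimal Python sketch of a calibration check (my own illustration, not the extension's code): predictions are grouped into probability buckets, and each bucket's average prediction is compared with how often the event actually happened. The function name and the 10-bucket split are assumptions.

```python
from collections import defaultdict


def calibration_table(predictions, outcomes, n_buckets=10):
    """Bucket predicted probabilities and compare them with what actually happened.

    Returns a list of (mean_predicted, observed_frequency, count) tuples,
    one per non-empty bucket, ordered from the lowest bucket to the highest.
    """
    buckets = defaultdict(list)
    for p, happened in zip(predictions, outcomes):
        # p == 1.0 would land in an extra bucket, so clamp it into the top one.
        idx = min(int(p * n_buckets), n_buckets - 1)
        buckets[idx].append((p, happened))

    table = []
    for idx in sorted(buckets):
        rows = buckets[idx]
        mean_pred = sum(p for p, _ in rows) / len(rows)
        observed = sum(1 for _, happened in rows if happened) / len(rows)
        table.append((mean_pred, observed, len(rows)))
    return table


# Ten turns predicted at 70%: if 7 of them happen, that bucket is well calibrated.
example = calibration_table([0.7] * 10, [True] * 7 + [False] * 3)
```

If the model is calibrated, the two numbers in each row should be close. Note that a perfectly calibrated 70% prediction is still "wrong" 30% of the time, which is why plain accuracy undersells a model like this.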

How does it work?

All the machine learning models start with the same data: battle replays. I downloaded all the replays for gen8ou battles from June 3rd to June 13th and kept the ones where both players have a rating of 1250 or higher. The dates were chosen to reflect the most recent meta-game (more on that later), and the rating cutoff was kinda arbitrary: I wanted to make sure the battlers knew what they were doing, but I also wanted to keep as much data as possible. All the models also share the same inputs, which are taken for each turn of each battle:
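
The filtering step can be sketched as below. The `Replay` record and its field names are hypothetical (real replay data has its own layout), the date range is treated as inclusive, and the year 2020 is an assumption since the post only says June 3rd to June 13th.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Replay:
    # Hypothetical record layout; real replay data is structured differently.
    format_id: str
    played_on: date
    p1_rating: int
    p2_rating: int


def keep_replay(replay, start, end, min_rating=1250, format_id="gen8ou"):
    """True if the replay is in the right format, inside the (inclusive)
    date window, and both players are at or above min_rating."""
    return (
        replay.format_id == format_id
        and start <= replay.played_on <= end
        and replay.p1_rating >= min_rating
        and replay.p2_rating >= min_rating
    )


# Assumed window: June 3rd to June 13th, 2020 (the year is a guess).
START, END = date(2020, 6, 3), date(2020, 6, 13)

replays = [
    Replay("gen8ou", date(2020, 6, 5), 1400, 1300),   # kept
    Replay("gen8ou", date(2020, 6, 5), 1400, 1200),   # one player below 1250
    Replay("gen8ou", date(2020, 6, 20), 1500, 1500),  # outside the window
    Replay("gen8uu", date(2020, 6, 5), 1500, 1500),   # wrong tier
]
dataset = [r for r in replays if keep_replay(r, START, END)]
```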

- Each Pokemon's current HP

- Each Pokemon's statuses

- Which Pokemon are in on either side

- Stat boosts on either side

- Last used move on either side

- The volatile and side effects on either side

- Weather and pseudo-weather active

- The "Switch Coefficient" for the Pokemon who are in: how often one Pokemon switches out when the opposing Pokemon is also in
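
One simple way to compute a switch coefficient from past turns is a plain ratio: of all the turns where Pokemon A was in against Pokemon B, what fraction ended with A switching out. This is a sketch under that assumption; the post doesn't give the exact formula, and the tuple layout and example history are hypothetical.

```python
from collections import Counter


def switch_coefficients(turns):
    """turns: iterable of (active, opposing, switched_out) tuples, one per turn.

    Returns {(active, opposing): fraction of those turns where active switched out}.
    """
    seen = Counter()
    switched = Counter()
    for active, opposing, switched_out in turns:
        seen[(active, opposing)] += 1
        if switched_out:
            switched[(active, opposing)] += 1
    return {pair: switched[pair] / n for pair, n in seen.items()}


history = [
    ("Ferrothorn", "Heatran", True),
    ("Ferrothorn", "Heatran", True),
    ("Ferrothorn", "Heatran", True),
    ("Ferrothorn", "Heatran", False),
    ("Ferrothorn", "Toxapex", False),
    ("Ferrothorn", "Toxapex", False),
]
coefficients = switch_coefficients(history)
```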

Predicting who will be switched in requires first training a model whose output is a list of all Pokemon, with the Pokemon that actually switched in marked as the correct answer. Once that is trained, another layer with the same set of outputs is added on the end so the model can learn which Pokemon are brought in under similar situations. For example, the first layer may only give Seismitoad a large chance to be switched in, but the second layer has learned that a high chance for Seismitoad should also mean a high chance for Gastrodon and Quagsire.
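
The two-stage idea can be illustrated with plain Python. Stage 1 stands in for the first trained model's softmax output; stage 2 is an extra dense layer with the same outputs whose weights let probability flow between related Pokemon. The logits and weights here are hand-picked for illustration only (in the real models they would be learned, e.g. with TensorFlow.js), and the four-Pokemon list is hypothetical.

```python
import math

POKEMON = ["Seismitoad", "Gastrodon", "Quagsire", "Corviknight"]


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    # weights[j][i] is the contribution of input i to output j.
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


# Stage 1: hypothetical output of the first model, strongly favouring Seismitoad.
stage1 = softmax([3.0, 0.0, 0.0, 0.5])

# Stage 2: added layer with the same outputs. These hand-picked weights stand in
# for learned ones: Seismitoad's probability also feeds Gastrodon and Quagsire.
W = [
    [4.0, 1.0, 1.0, 0.0],
    [3.0, 4.0, 1.0, 0.0],
    [3.0, 1.0, 4.0, 0.0],
    [0.0, 0.0, 0.0, 4.0],
]
stage2 = softmax(dense(stage1, W, [0.0] * len(POKEMON)))
```

With these numbers Seismitoad's stage-1 probability is about 0.85 while Gastrodon and Quagsire sit near 0.04; after the second layer Seismitoad drops to about 0.5 and Gastrodon and Quagsire rise to roughly 0.24 each.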

For predicting which move they will use, the model was trained to predict the chance of every move being used. Then, based on which Pokemon is in, it multiplies each of those chances by 1 if that Pokemon has already used the move in this battle, or otherwise by the move's usage percentage for that Pokemon from the most recent moveset usage stats. This teaches the model both which moves a Pokemon can use and how likely the Pokemon is to actually have a given learnable move.
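
A minimal sketch of that multiply step, assuming the model's raw move probabilities come as a dictionary. Renormalizing so the results sum to 1 is my assumption (the post only describes the multiplication), and the Toxapex move list and usage numbers are made up for illustration.

```python
def mask_move_probs(raw, revealed, usage):
    """Scale each move's raw probability by how likely this Pokemon is to have it.

    raw:      move -> probability from the model
    revealed: moves this Pokemon has already used in the current battle
    usage:    move -> fraction of this Pokemon's sets that run the move
    """
    scaled = {}
    for move, p in raw.items():
        # Revealed moves are certain (factor 1); otherwise use the usage stat,
        # and moves that never show up in the stats get 0.
        factor = 1.0 if move in revealed else usage.get(move, 0.0)
        scaled[move] = p * factor
    total = sum(scaled.values())
    if total == 0:
        return scaled
    # Assumption: renormalize so the masked chances sum to 1 again.
    return {move: s / total for move, s in scaled.items()}


# Made-up numbers for a Toxapex that has already revealed Scald.
raw = {"Scald": 0.3, "Recover": 0.3, "Toxic": 0.2, "Flamethrower": 0.2}
usage = {"Scald": 0.95, "Recover": 0.9, "Toxic": 0.8, "Haze": 0.5}
chances = mask_move_probs(raw, {"Scald"}, usage)
```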

I know that wasn't the clearest explanation, so if you want to learn more, I'm happy to answer any questions. I just wanted to get this out there now, so clarity wasn't top of mind.

How well does it work?

The models were tested on a separate set of battles to measure both how often outcomes given a specific chance actually happen and the overall accuracy of each model.

Each model has a 95% confidence interval of ±8%, which means the chance it gives for something to happen is within 8 percentage points on either side of the real chance. For a game and players this random, predicted without full information on either team, those are very good results and mean the chances the model returns are reliable. I tried a whole bunch of different methods (which I won't go into now, to spare this post from becoming an essay), and narrowing that interval any further will be an ordeal.
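
The post doesn't say exactly how the ±8% interval was computed. One common approach, shown here as an assumption, is the normal approximation for an observed rate: for a bucket of n predictions, the 95% half-width is 1.96·sqrt(p(1 − p)/n). The sketch computes that, plus how many events a bucket needs for the half-width to reach 8% in the worst case (p = 0.5).

```python
import math


def ci_half_width(p, n, z=1.96):
    """Normal-approximation half-width of a 95% interval (z = 1.96)
    for an observed rate p measured over n events."""
    return z * math.sqrt(p * (1 - p) / n)


def events_needed(half_width, p=0.5, z=1.96):
    """Smallest n whose half-width is at most half_width.
    p = 0.5 is the worst case because p * (1 - p) peaks there."""
    return math.ceil((z / half_width) ** 2 * p * (1 - p))


n = events_needed(0.08)
```

Under this approximation, a bucket needs about 150 predictions before its worst-case interval shrinks to ±8%.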

And even though I only cared about finding the chance, the models are pretty accurate too. For any given turn in a battle, the win-chance model is 67% accurate (and that number increases the further into the battle you go). All the other models have an accuracy of ~65%. However, from running the Chrome extension on battles over the last couple of days, it feels like the switch-in prediction is either always right or always wrong for a given battle, but that's just anecdotal evidence.
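
Accuracy that improves as the battle goes on can be measured by grouping test predictions by turn number. In this sketch a win-chance prediction counts as correct when it is above 0.5 and the player won, or at or below 0.5 and the player lost; that threshold and the tuple layout are assumptions.

```python
from collections import defaultdict


def accuracy_by_turn(records):
    """records: iterable of (turn, predicted_win_chance, player_won).

    Returns {turn: fraction of predictions that favoured the eventual result}.
    A chance of exactly 0.5 is treated as predicting a loss.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for turn, chance, won in records:
        totals[turn] += 1
        if (chance > 0.5) == won:
            hits[turn] += 1
    return {turn: hits[turn] / totals[turn] for turn in sorted(totals)}


results = accuracy_by_turn([
    (1, 0.6, True),
    (1, 0.6, False),
    (10, 0.8, True),
    (10, 0.3, False),
])
```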

The biggest caveat to all of this has to do with how well it reflects the current meta-game. First off, it was made with how people play OU in mind and nothing else, so it shouldn't be used in other tiers. It might work fine in UU and decently in RU, but anything else would just be luck. It's very easy for me to make models for the other tiers since all I'd need to do is download the replays, but because getting replays more than a day old is a royal pain, I'm not going to do that soon. The reason I'm waiting is the other obvious downfall of this: DLC is about to change everything. That means the extension as it is now will stop working once all the DLC is added, and it may take a while before the meta-game is stable enough to predict again. That's why I'm launching the extension now (or as soon as the Chrome store is done reviewing it), so people can use it and see what they think before I have to wait a month to update it.

What comes next?

So obviously my next big objective is making it work post-DLC, but in the meantime I'll probably get it working on the National Dex meta-game. Beyond that, my next goals are:

- Get it to work with double battles (probably for VGC rules)

- Add other meta-games

- Make it agnostic to a meta-game / specific Pokemon

I first found this forum a few days ago while looking for a way to tell people about this, and only while writing this post did I notice that a few people have tried this before. If I'd seen that any earlier, I would've helped them; as it is, I'm open to talking with anyone about helping on this project.

Once it's available on the Chrome Store, I'll post here again. But if you just look up "Pokemon Battle Predictor" in the Chrome Store in a couple of hours, it should be there.

And one more thing: you might be thinking, "If you can find the chance to win for any turn and predict your opponent's next move, couldn't you also use this to make a good Battle AI?" Yes, yes you could, and I only know that because I did, but I'll talk more about that later.
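
The post doesn't describe how the Battle AI works, so this is only a generic one-step sketch of the idea: weight the predicted win chance of each of your options by the predicted probability of each opponent action, then pick the option with the highest expected win chance. The `win_chance` function and all the numbers are hypothetical stand-ins for the models' outputs, and a real AI would also need to handle things like turn order and looking more than one turn ahead.

```python
def best_action(my_actions, opp_action_probs, win_chance):
    """Pick the action with the highest expected win chance.

    opp_action_probs: opponent action -> predicted probability
    win_chance(mine, theirs): predicted win chance after that pair of actions
    """
    def expected(mine):
        return sum(p * win_chance(mine, theirs) for theirs, p in opp_action_probs.items())

    return max(my_actions, key=expected)


# Hypothetical win chances after each (my action, their action) pair.
TABLE = {
    ("Earthquake", "Flamethrower"): 0.7,
    ("Earthquake", "Switch to Rotom-Wash"): 0.3,
    ("Switch to Corviknight", "Flamethrower"): 0.4,
    ("Switch to Corviknight", "Switch to Rotom-Wash"): 0.6,
}
opp = {"Flamethrower": 0.6, "Switch to Rotom-Wash": 0.4}
choice = best_action(["Earthquake", "Switch to Corviknight"], opp, lambda m, t: TABLE[(m, t)])
```

Here Earthquake's expected win chance is 0.6 × 0.7 + 0.4 × 0.3 = 0.54 versus 0.48 for switching, so the sketch picks Earthquake.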
